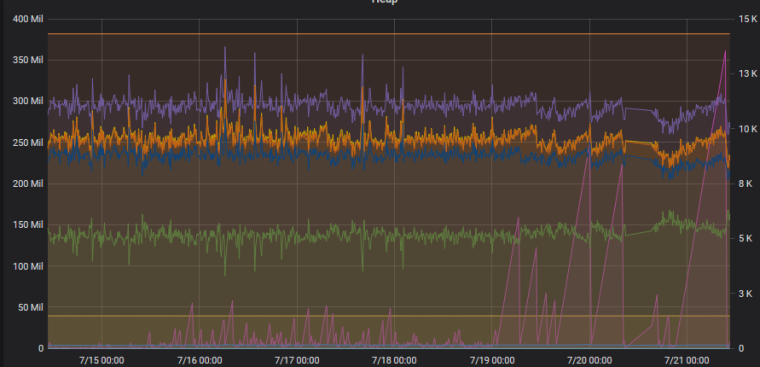

IVM heap usage & game objects

-

I found that if I disable my Observers' scanning function, my heap memory is no longer exceeded nearly as often. My observer scanning worked sort of like a radar: the observers would sweep the nearby rooms and enable vision in rooms I could not currently see. With the scanning active, my heap was reset (memory exceeded) every ~25-100 ticks. With the scanning disabled, I can again reach thousands of ticks on the same heap. (It is still fluctuating a lot, but not going over the limit all the time.)

Normally I have vision in about 230 rooms, and adding the Observer scanning, which means 30 more rooms are added/removed each tick, obviously had a huge impact. Without this scanning of rooms my heap is now much more stable. To me it seems like there is an issue when many rooms are added/removed for only a tick at a time?
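
For readers who have not written one, a radar-style sweep like the one described above could look roughly like this. It is only a sketch under assumptions: `pickScanTargets` and the room list are hypothetical names, not the poster's code, and the real scanner may have chosen rooms differently.

```javascript
// Hypothetical helper: decide which room each observer looks at on a given tick.
// roomNames is the list of rooms to sweep; the sweep advances by
// observerCount rooms per tick and wraps around at the end of the list.
function pickScanTargets(roomNames, observerCount, tick) {
  const targets = [];
  if (roomNames.length === 0) return targets;
  for (let i = 0; i < observerCount; i++) {
    const index = (tick * observerCount + i) % roomNames.length;
    targets.push(roomNames[index]);
  }
  return targets;
}

// In a Screeps loop this might be used as (assumes `observers` is an array
// of StructureObserver objects and `sweepRooms` is your own room list):
//   const targets = pickScanTargets(sweepRooms, observers.length, Game.time);
//   observers.forEach((o, i) => o.observeRoom(targets[i]));
```

With 30 observers this requests 30 different rooms every tick, which matches the "30 more rooms added/removed each tick" pattern described in the post.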

-

Since ~21.6. the heap/auto-gc problems (resulting in ~+30% CPU on average per tick for me) are back again on shard0. I already reduced my bases on shard0 in the past to prevent heap problems, but out of nowhere losing a regular full tick execution every second tick is so cruel. It seems auto-gc is also firing when there is enough heap left. The auto-gc threshold seems to be at a much lower level now.

-

@demawi said in IVM heap usage & game objects:

I have more problems with this: (don't know if it's also a heap problem?)

[00:06:38][shard0]Script execution timed out ungracefully
[00:06:43][shard0]Script execution timed out ungracefully
[00:06:48][shard0]Script execution timed out ungracefully
[00:06:53][shard0]Script execution timed out ungracefully
[00:06:58][shard0]Script execution timed out ungracefully
[00:07:03][shard0]Script execution timed out ungracefully
[00:07:07][shard0]Script execution timed out ungracefully
[00:07:12][shard0]Script execution timed out ungracefully

currently... each tick without getting to my code.. I already had such a phase a few days ago, where it lasted over 200 ticks..

This is exactly what I'm running into as well. I've already tried all kinds of things to get it fixed in the past 2 weeks; nothing helps. Once the code has been running for a while it starts to happen, and then seems to get more frequent until at some point every tick gives this error, for up to 500 ticks in the worst case.

Reassigning heap per shard like CPU would only be fair; I do not want any rooms on other shards.

-

Had no issue for the last 2 days (most likely since the server was down on Saturday); it just started again with the mail from 5:54.

-

Update: After I started removing all leftover structures in my remotes I am hardly getting any more "Isolate was disposed during execution due to memory limit". Before I removed all enemy structures in remotes, my global lasted on average 50-100 ticks before the limit was reached. After I aggressively started clearing all abandoned rooms near me (I've cleared 70+ rooms now), the problem is as good as gone: my global can last for thousands of ticks again, mostly resetting when I upload code. This further strengthens the theory that it is the game objects that are the big problem when you have vision in many rooms, not player code. My heap graphs are still very noisy, but the heap is no longer reaching the limit randomly.

I started clearing the abandoned rooms on 7/19 and made no code changes relating to optimizing globals since then.

-

I would really like to draw attention to this issue again. I was hoping the promised persistent game objects would completely change the heap behaviour/requirements. Now that the persistent game objects seem to be taking a while, the problems with heap persist.

I am at GCL34, everything on shard1. I have vision on ~230 rooms in which there are ~5100 structures, fielding ~1000 creeps. My heap from tick to tick starts at 65%, goes to 90% and then back to 65%. It seems to skip garbage collection when at 90% every so often, resulting in hitting a memory limit reset. Sometimes it's 2 times per hour, sometimes it's 20~40 times per hour.

If I call gc(true) every 3rd tick I lose a massive amount of CPU. At GCL32 I had a 3 step heap build-up doing roughly a 55%, 70%, 85% cycle without missing collections; things ran massively better with that pattern. My CPU took a nose dive since. It seems to me the heap is just too small for V8 to do its 'regular' garbage collection scheduling. I am still left wondering what on earth requires 250 MB of heap space after garbage collection. That's more than 1 MB per room?
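
The percentages and the per-room figure above can be reproduced with a small sketch. This assumes the heap percentage is computed from `Game.cpu.getHeapStatistics()` as (total_heap_size + externally_allocated_size) / heap_size_limit, which is a common convention but not necessarily what the poster's graphs use; `heapFraction` and `megabytesPerRoom` are hypothetical helper names.

```javascript
// Fraction of the heap limit in use, given a heap statistics object with
// the fields returned by Game.cpu.getHeapStatistics() (assumed formula).
function heapFraction(stats) {
  const used = stats.total_heap_size + stats.externally_allocated_size;
  return used / stats.heap_size_limit;
}

// Average heap per visible room, in the same unit as heapMb.
function megabytesPerRoom(heapMb, visibleRooms) {
  return heapMb / visibleRooms;
}

// Figures from the post: 250 MB left after GC, ~230 visible rooms.
const perRoom = megabytesPerRoom(250, 230); // a little under 1.09 MB per room

// Inside Screeps (IVM only) the fraction could be logged each tick:
//   const pct = Math.round(heapFraction(Game.cpu.getHeapStatistics()) * 100);
//   console.log(`heap: ${pct}%`);
```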

Could we possibly get more heap until the persistent game objects land?

-

@tun9an0 said in IVM heap usage & game objects:

I am still left wondering what on earth requires 250mb of heap space after garbage collection

Maybe the same that causes chromium processes to guzzle up all that RAM like there is no tomorrow? Since V8 is also what runs in those.

-

@orlet No, if your PC has plenty of free memory Chrome may liberally allocate large heaps. It doesn't mean anything in the context of this issue, where the IVM is starving for heap (i.e. no free space).

-

I gained another GCL and now call gc(true) every tick. It ticks at 64% and then 85~95%. I still get frequent resets on code running on top of the 85~90% (which I can't get rid of).

Some response to this would be nice, please...

-

Back up to bouncing off the memory limit here. ~230 rooms visible. ~5000 structures in Game.structures. ~850 creeps in Game.creeps. On top of this there will be plenty of enemy creeps and structures.

What's the ETA for persistent game objects?

-

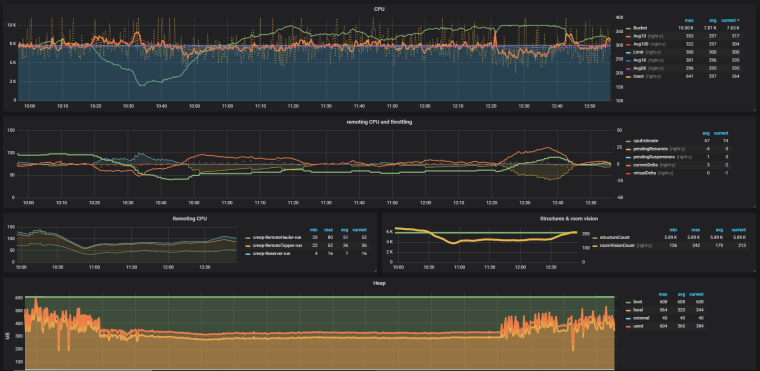

Things had been running a bit better since the last heap increase but today, or just now, a shift happened in the heap which is murdering my CPU.

The downward spike in heap shortly after 14:05 is a code upload to reset the IVM. Before this I dropped ~15 CPU worth of remotes; currently I am at ~30 CPU less remoting and only now does it start to somewhat recover.

If the server was reconfigured to improve things, please consider reverting...

-

@tun9an0 The only change today was increasing the heap limit by ~30%. Perhaps some side effects in your code are reacting to the increased heap size?

-

@artch The only interaction I have with the heap is doing gc(true) when the heap gets to 85% use, to avoid memory limit resets. I have not made any changes to my workload before the above drop in bucket. Apart from the heap getting bigger, the local use seems to have shifted by about 200 MB as well; I have no idea how I could influence that... Getting a 30% increase with the local use staying where it was would be very liberating.
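
A threshold-triggered collection like the one described in this post might look roughly as follows. This is a sketch, not the poster's code: `maybeCollect` is a hypothetical name, the collector is passed in as a parameter so the function does not depend on a global, and the heap fraction formula is an assumption.

```javascript
// Hypothetical helper: force a full collection only when heap use is at or
// above `threshold` (0.85 = the 85% mentioned in the post).
// `collect` is whatever collector is available, e.g. the gc function
// the posts in this thread call.
function maybeCollect(stats, collect, threshold = 0.85) {
  const used = stats.total_heap_size + stats.externally_allocated_size;
  const fraction = used / stats.heap_size_limit; // assumed formula
  if (fraction < threshold) return false;
  collect(true); // true requests a full collection, as in gc(true)
  return true;
}

// Possible use at the top of the main loop (guards are needed because
// neither function is guaranteed to exist in every environment):
//   if (typeof gc === 'function' && Game.cpu.getHeapStatistics) {
//     maybeCollect(Game.cpu.getHeapStatistics(), gc);
//   }
```

Passing the collector in keeps the decision logic testable outside the game, and makes it easy to change the threshold after a heap limit change like the one discussed here.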

-

What I am currently running into is the heap for some reason starting to flatline after a while. Unfortunately this comes with 25~35 less CPU.

Uploading code at 12:21 restores the more variable heap behavior and CPU performance. It seems like IVM starts to do full GCs within every tick at some point..

@artch It could help if we could cleanly reset our IVM through code..

-

There's a current PR for it here https://github.com/screeps/driver/pull/30 by @The_General. If there were a cooldown on it, I feel like it could be a viable solution. Would a 1-5k tick cooldown between calls work? I'm sure it would not be too difficult to prevent it from being called so frequently that it negatively affects tick times and the back end...

-

I am seeing the same thing as described by @TuN9aN0. After the heap limit was increased 6 days ago, the heap stats started "flatlining" and CPU costs increased. Basically I've not had anything in my bucket since the increased limit.

The only code I have that reacts to the heap limits is my own garbage functions that run at certain thresholds of the heap size, deleting certain cached objects once the limits are approached. I guess it's possible that my caches are getting too big. I will attempt to also clear them after a certain global age has been reached, in addition to when the heap limits are approached, and see if this helps.
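
The plan in this post (clear caches when heap use gets high, or when the global has lived long enough) could be sketched like this. All names and default thresholds below are hypothetical, and the caches are assumed to be plain `Map` objects kept on the global.

```javascript
// Hypothetical policy: clear when heap use is high OR the global is old.
function shouldClearCaches(heapFraction, globalAgeTicks, options = {}) {
  const { heapThreshold = 0.8, maxGlobalAge = 5000 } = options; // assumed defaults
  return heapFraction >= heapThreshold || globalAgeTicks >= maxGlobalAge;
}

// Empty every cache in place so existing references stay valid.
// Returns the number of entries that were dropped.
function clearCaches(caches) {
  let dropped = 0;
  for (const cache of caches) {
    dropped += cache.size;
    cache.clear();
  }
  return dropped;
}

// Possible use in the main loop (globalStartTick would be recorded once,
// outside the loop, when the global is first created):
//   const age = Game.time - globalStartTick;
//   if (shouldClearCaches(currentHeapFraction, age)) clearCaches([pathCache, costCache]);
```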

-

I also tried resetting my own caches to recover, no measurable effect, flatline persists..

-

Yeah - I "flatlined" for about 10 hours overnight. It cost me ~25 CPU/tick. This was CPU my code never saw: it was used either before the start or after the end of my tick.

Restarting the VM fixed it.

-

What I am observing is an increased general cost for all my functions when the heap is "flatlining". Calling my own gc functions had no effect, same as calling the official gc(). Only a code upload resets the heap for me.

-

Could that be some kind of gc death loop?

One where it tries to remove garbage that somehow links to itself, until it decides to give up and wait for the next tick?