Clarification request on "Cpu limit abusing"

-

There are two types of user script resets. In most cases, it happens using the `timeout` option in the `vm.runInContext` method. It implements the watchdog timer internally, and is able to gracefully stop the script execution exactly when we request. This situation is perfectly fine. Let’s call it a “soft reset.”

However, in some cases and some kinds of workload (e.g. running a heavy native function like `JSON.parse`, or some types of infinite loops), this timer doesn’t fire, so it cannot stop the script immediately. In this case we allow the script to spin for 1000ms (not 500ms!), and if even this time is not enough to finish, then we force restart the entire runtime process. It’s a “hard reset.” And when this happens often, or even every tick for some player (if he has some code inflicting that every tick), it starts to have a serious impact on overall performance, since many user scripts are being reset constantly at this node.

We cannot avoid this due to Node.js architecture (if you have any idea how this can be done, please share). This is why we decided to penalize users for such behavior a little bit, and encourage them to investigate their own code in order to prevent such hard resets in our system.
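As a rough illustration of the soft-reset path only, here is a minimal sketch of running a script under the `timeout` option of `vm.runInContext`. The helper name `runUserScript` and the status strings are made up for this example and are not the server's actual code; the hard-reset path (restarting the runtime process) is not shown.

```javascript
const vm = require("vm");

// Hypothetical helper: run a script with a watchdog timeout and report how it ended.
function runUserScript(code, timeoutMs) {
  const context = vm.createContext({});
  try {
    const value = vm.runInContext(code, context, { timeout: timeoutMs });
    return { status: "ok", value };
  } catch (err) {
    // Node.js reports a fired watchdog timer with this error code.
    if (err && err.code === "ERR_SCRIPT_EXECUTION_TIMEOUT") {
      return {
        status: "soft reset",
        message: "Script execution has been terminated: CPU limit reached",
      };
    }
    // Any other error thrown by the script itself.
    return { status: "error", message: String(err) };
  }
}

const finished = runUserScript("1 + 1", 50);
const looping = runUserScript("while (true) {}", 50);
console.log(finished.status, looping.status);
```

A plain `while (true) {}` is a case the watchdog can interrupt; the workloads described above as causing hard resets are the ones where it does not fire in time.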

In order to clarify this further, we have now changed the error message a bit. When you reach your CPU limit and it causes a soft reset, you will get this message as usual:

Script execution has been terminated: CPU limit reached

This is OK, no consequences for you, you can do that every tick safely.

But when you cause a runtime hard reset it will be like this:

Script execution has been terminated with a hard reset: CPU limit reached

After this, you will be penalized for some ticks with this message:

Your script is temporary blocked due to a hard reset inflicted to the runtime process. Please try to change your code in order to prevent causing hard timeout resets.

All these changes are now pushed (but not deployed yet) to private servers also, see this commit.

-

So this could have happened due to a very long running GC (seems to be the root cause, as I haven't had a single one after this one).

I already hard-limit my own scripts when the bucket is empty (for whatever reason).

"Stopping" my own script is smarter because it only takes 30 CPU, meaning 270 CPU goes back to the bucket.

Thanks a lot for the clarification.

It looks logical to kill off and hold off the process for 5 ticks, else the user could create while(true) loops to hog the server every tick.

My suggestion would be to check if the script of the player is doing this on regular basis, if it does block it off like you did before. Not after 1 tick of something locking up.

-

So this could have happened due to a very long running GC (seems to be the root cause, as I haven’t had a single one after this one).

GC is unlikely to run for 500-1000ms actually, it is usually less than 200ms.

My suggestion would be to check if the script of the player is doing this on regular basis, if it does block it off like you did before. Not after 1 tick of something locking up.

Yes, it is the next step we’re going to implement later.

-

> We cannot avoid this due to Node.js architecture (if you have any idea how this can be done, please share). This is why we decided to penalize users for such behavior a little bit, and encourage them to investigate their own code in order to prevent such hard resets in our system.

I'm currently researching this. It might be possible with an extension, but that might degrade performance even more. Penalizing players who have infinite loops is, in my eyes, a better solution. If 10 people on the same node do the same thing you will have tick times of over 10 seconds, we can't have that! If I find anything I'll report back in a support ticket.

> GC is unlikely to run for 500-1000ms actually, it is usually less than 200ms.

This lines up with my findings as well, most hits add 2~20 CPU (80% of the GC's), the larger ones seem to be ranging from 80~150 CPU (19-ish% of the GC's). Luckily, we get a bucket for this!

As far as I know I don't have any infinite loops anywhere in my code and am suspecting a perfect storm of different events (high JSON parsing costs + gc + json serialize + shitton of creep actions). It has only happened once in a few million ticks, nothing to worry about, it was just confusing as I never saw it before.

> Yes, it is the next step we’re going to implement later.

Awesome!

-

This makes a lot of sense to me, and to be honest I wouldn't have an issue if you lowered the hard reset timeout down to something like 600ms, especially if it means tick speed might increase as a result.

I definitely appreciate the explanation and improved documentation around this, as it really helps understand the issue. I'd like to suggest you also update the page describing the game loop so new players are likely to see this information sooner.

-

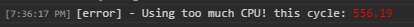

Sometimes this happens as well:

This is presented when something happened during some method (60 CPU action somewhere causing the 500 limit to be exceeded):

How much is 1 CPU nowadays? Is it still 0.5 ms? If so, 600 ms seems reasonable.

-

Is there a way to include the call stack in the timeout message?

-

I wouldn’t have an issue if you lowered the hard reset timeout down to something like 600ms, especially if it means tick speed might increase as a result.

600ms (500+100) might not be enough for some GC runs.

How much is 1 CPU nowadays? Is it still 0.5 ms?

1 CPU is always equal to 1 ms.

Is there a way to include the call stack in the timeout message?

Unfortunately, no, there is no way to determine at what line the underlying script has been interrupted exactly.

-

According to my GC timings, a max hit I once had was 180something ms.

Based on this I'd suggest the hard limit being the max amount of CPU a user can use that tick + 180.

The new max will become 680ms if your bucket is full.

If a player has 10 CPU, and drained his bucket he should get a max of 190 CPU.
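The arithmetic behind this suggestion can be sketched as follows. `hardResetTimeout` and `GC_ALLOWANCE_MS` are hypothetical names for this example; the 180 figure is the poster's own largest measured GC hit, and 1 CPU is taken as 1 ms as stated earlier in the thread.

```javascript
// Largest GC pause the poster reports having measured, in ms (1 CPU = 1 ms).
const GC_ALLOWANCE_MS = 180;

// Hypothetical helper: proposed hard-reset timeout for one tick, given the
// maximum CPU the player is allowed to use that tick.
function hardResetTimeout(maxCpuThisTick) {
  return maxCpuThisTick + GC_ALLOWANCE_MS;
}

const fullBucket = hardResetTimeout(500);   // player allowed the full 500 CPU
const drainedBucket = hardResetTimeout(10); // 10 CPU player with an empty bucket
console.log(fullBucket, drainedBucket);     // 680 190
```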

Another suggestion would be to scale bucket usage per GCL (10 CPU can max use 20 CPU etc) but that's a whole different discussion, and has the same issues with GC.

-

I've seen this quite a few times in the last few days as well, even though my code is "supposed" to also stop when Game.cpu.getUsed is getting too close to Game.cpuLimit.

It's really hard to investigate those when you have a few a day (i.e., it's not something easily reproducible) so, as bonzai mentioned, it would be wonderful to have a bit of a hint (dump or whatever).
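For readers unfamiliar with the kind of guard being described, here is a minimal sketch of stopping work when used CPU gets close to the limit. `runWithinBudget`, `safetyMargin`, and the mock `cpu` object are assumptions for this example; in the game the `cpu` argument would presumably be `Game.cpu`.

```javascript
// Hypothetical helper: run tasks in order, stopping once used CPU is within
// `safetyMargin` of the limit. Returns how many tasks were completed.
function runWithinBudget(cpu, tasks, safetyMargin) {
  let completed = 0;
  for (const task of tasks) {
    if (cpu.getUsed() >= cpu.limit - safetyMargin) break;
    task();
    completed++;
  }
  return completed;
}

// Mock standing in for the game's CPU object: every task "costs" 4 CPU.
let used = 0;
const mockCpu = { limit: 20, getUsed: () => used };
const tasks = Array.from({ length: 10 }, () => () => { used += 4; });

const done = runWithinBudget(mockCpu, tasks, 5);
console.log(done, used);
```

A guard like this only checks between tasks, so a single expensive call or a GC pause in the middle of a task can still overshoot the limit, which matches what is being reported here.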

-

I've just got a new one which I don't know what to do with:

Script execution has been terminated: Unknown system error

-

This happens when the server stops functioning for some reason. There is nothing you can do about that one.

-

I'm getting this too.

I frequently go 5 CPU above limit when my creeps repath.

It's getting annoying

-

The only loops I have are for...in loops.

-

This is happening to me rather frequently (several times a day), and after some looking into it, it only appears to happen on ticks where I call Game.market.getAllOrders().

I've read elsewhere that this is a poorly-performing server-side function and as a result have set it up to only fire when 1) I don't have market data cached to a global, or 2) I am at max bucket and can afford the CPU hit to refresh the data. However, it seems silly that calling this just once can hit the CPU limit, even if you cache it and don't call it for minutes at a time, and is making me reconsider using market at all.
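The caching rule described in this post could look roughly like the sketch below. `getOrders`, the `cache` object, and the mock game are hypothetical; the only real API call assumed is `Game.market.getAllOrders()`, and the bucket cap of 10000 is an assumption to adjust if your server differs.

```javascript
const FULL_BUCKET = 10000; // assumed bucket cap

// Hypothetical helper: refresh market data only when nothing is cached yet,
// or when the bucket is full and the CPU hit is affordable.
function getOrders(game, cache) {
  const noCache = cache.orders === undefined;
  const canAfford = game.cpu.bucket >= FULL_BUCKET;
  if (noCache || canAfford) {
    cache.orders = game.market.getAllOrders();
    cache.tick = game.time;
  }
  return cache.orders;
}

// Mock game object so the sketch runs outside the game.
let calls = 0;
const mockGame = {
  time: 1,
  cpu: { bucket: 3000 },
  market: { getAllOrders: () => { calls++; return [{ id: "order" + calls }]; } },
};
const cache = {};

getOrders(mockGame, cache);                // nothing cached: fetches
mockGame.time = 2;
getOrders(mockGame, cache);                // cached, bucket low: reuses
mockGame.time = 3;
mockGame.cpu.bucket = 10000;
const latest = getOrders(mockGame, cache); // bucket full: refreshes
console.log(calls, latest[0].id, cache.tick);
```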

-

@roncli, the market orders thing was actually fixed:

http://support.screeps.com/hc/en-us/articles/212557785-Changelog-2016-09-30

I have received this notification about 7 times since the 22nd of January. It might be related to your code, which might have an infinite loop in an edge case.

also artem

Your script is temporary blocked due to a hard reset

Your script is temporar>l<y blocked due to a hard reset

-

"temporarily"

If the CPU whole is greater than the sum of its CPU parts, then I could take it resource-by-resource... is that right, though? I would figure grabbing the whole thing at once then grabbing it mineral by mineral would be faster.